Abdelrahman Shaker, Muhammad Maaz, Hanoona Rasheed, Salman Khan, Ming-Hsuan Yang and Fahad Khan

News

- (May 04, 2024): We’re thrilled to share that UNETR++ has been accepted to IEEE TMI-2024! 🎊

- (Jun 01, 2023): UNETR++ code & weights are released for Decathlon-Lung and BRaTs.

- (Dec 15, 2022): UNETR++ weights are released for Synapse & ACDC datasets.

- (Dec 09, 2022): UNETR++ training and evaluation code is released.

Overview

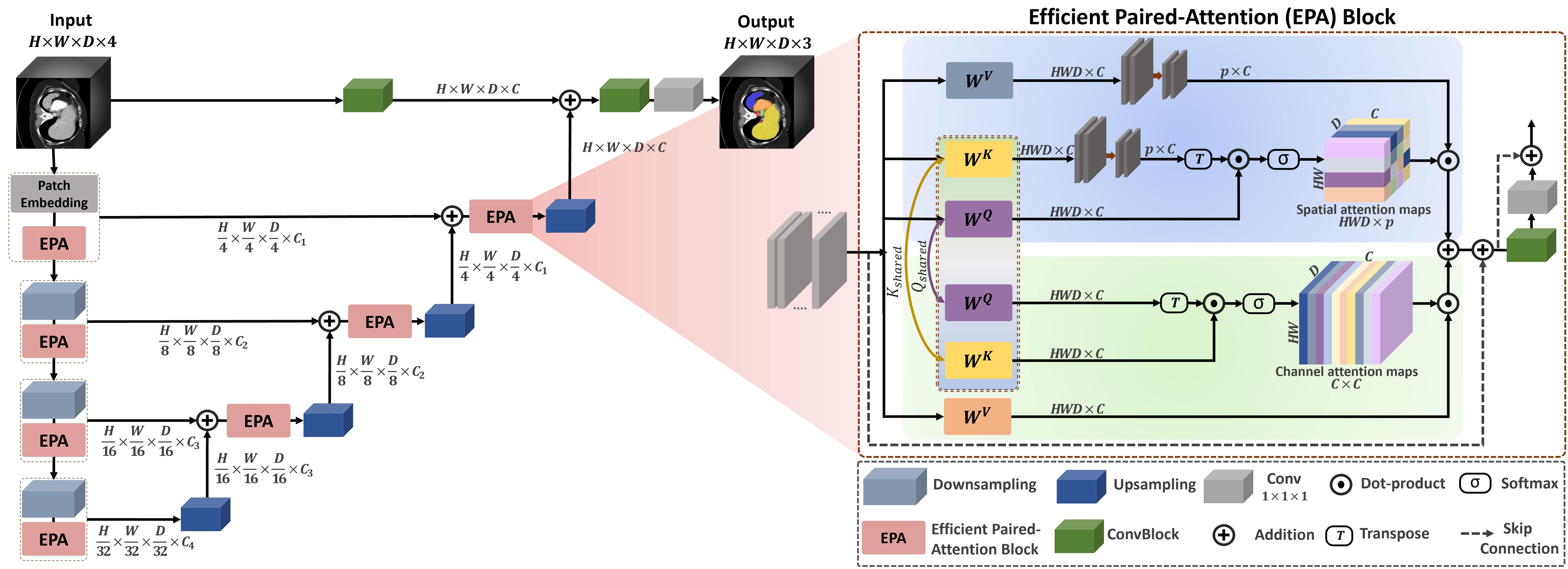

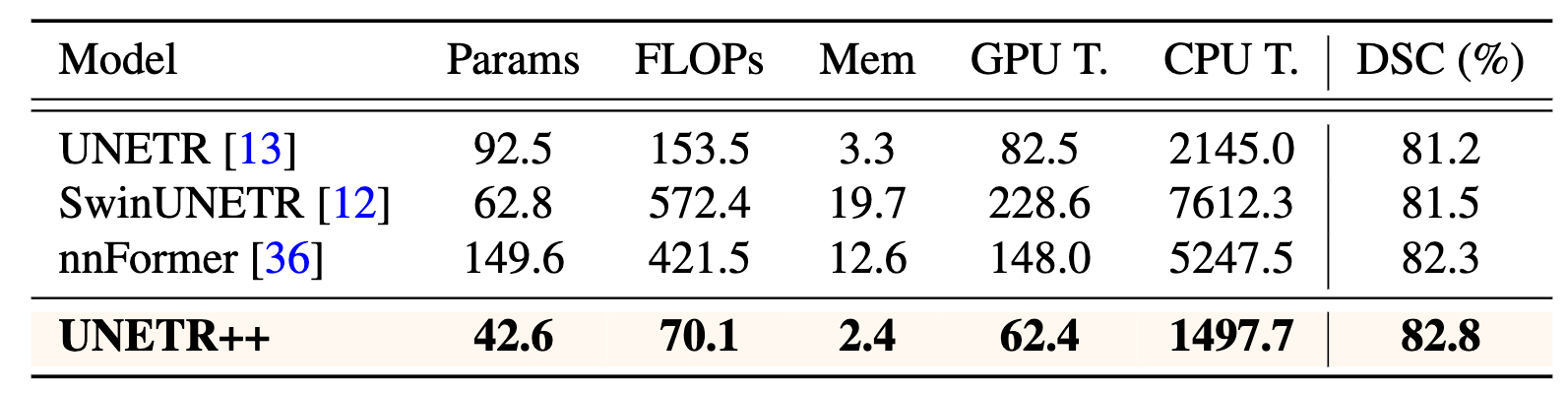

We propose an efficient hybrid hierarchical architecture for 3D medical image segmentation, named UNETR++, that strives to achieve both better segmentation accuracy and better efficiency in terms of parameters and FLOPs. Compared to the best existing method, nnFormer, UNETR++ achieves better segmentation performance while reducing model complexity by over 71%.

Abstract

Owing to the success of transformer models, recent works study their applicability in 3D medical segmentation tasks. Within the transformer models, the self-attention mechanism is one of the main building blocks that strives to capture long-range dependencies. However, the self-attention operation has quadratic complexity, which proves to be a computational bottleneck, especially in volumetric medical imaging, where the inputs are 3D with numerous slices. In this paper, we propose a 3D medical image segmentation approach, named UNETR++, that offers both high-quality segmentation masks and efficiency in terms of parameters, compute cost, and inference speed. The core of our design is the introduction of a novel efficient paired attention (EPA) block that efficiently learns spatial and channel-wise discriminative features using a pair of inter-dependent branches based on spatial and channel attention. Our spatial attention formulation is efficient, having linear complexity with respect to the input sequence length. To enable communication between the spatial and channel-focused branches, we share the weights of the query and key mapping functions, which provides a complementary benefit (paired attention) while also reducing the overall network parameters. Our extensive evaluations on five benchmarks, Synapse, BTCV, ACDC, BRaTs, and Decathlon-Lung, reveal the effectiveness of our contributions in terms of both efficiency and accuracy. On Synapse, our UNETR++ sets a new state-of-the-art with a Dice Score of 87.2%, while being significantly more efficient, with a reduction of over 71% in both parameters and FLOPs compared to the best method in the literature.
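To make the paired-attention idea more concrete, here is a minimal NumPy sketch of one attention head with a spatial and a channel branch that share the query and key mappings. It is an illustration under stated assumptions, not the released implementation: the function name `paired_attention`, the token-axis projections `proj_k` and `proj_v` (used here to keep the spatial attention map at size n × p, so cost grows linearly with n), the scaling factors, and the fusion by simple addition are all hypothetical choices made for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def paired_attention(x, w_q, w_k, w_v_spatial, w_v_channel, proj_k, proj_v):
    """Single-head sketch of a spatial + channel attention pair.

    x:            (n, c) flattened 3D tokens
    w_q, w_k:     (c, c) query/key weights SHARED by both branches
    w_v_*:        (c, c) separate value weights per branch
    proj_k/proj_v (p, n) assumed projections that shrink the token axis n -> p
    """
    n, c = x.shape
    q = x @ w_q                      # shared queries, (n, c)
    k = x @ w_k                      # shared keys,    (n, c)
    v_spatial = x @ w_v_spatial      # (n, c)
    v_channel = x @ w_v_channel      # (n, c)

    # Spatial branch: attention map is (n, p), so cost is O(n * p * c).
    k_proj = proj_k @ k              # (p, c)
    v_proj = proj_v @ v_spatial      # (p, c)
    attn_spatial = softmax(q @ k_proj.T / np.sqrt(c), axis=-1)   # (n, p)
    out_spatial = attn_spatial @ v_proj                          # (n, c)

    # Channel branch: attention map is (c, c), independent of n in size.
    attn_channel = softmax(q.T @ k / np.sqrt(n), axis=-1)        # (c, c)
    out_channel = v_channel @ attn_channel                       # (n, c)

    # Assumed fusion: plain sum of the two branch outputs.
    return out_spatial + out_channel

rng = np.random.default_rng(0)
n, c, p = 64, 8, 16                  # illustrative sizes only
x = rng.standard_normal((n, c))
w_q, w_k, w_vs, w_vc = (rng.standard_normal((c, c)) for _ in range(4))
proj_k = rng.standard_normal((p, n))
proj_v = rng.standard_normal((p, n))
out = paired_attention(x, w_q, w_k, w_vs, w_vc, proj_k, proj_v)
print(out.shape)
```

Because `w_q` and `w_k` are used by both branches, the sketch needs four weight matrices instead of six, which mirrors the parameter saving described above. Neither attention map is n × n, so the quadratic term in the sequence length is avoided.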

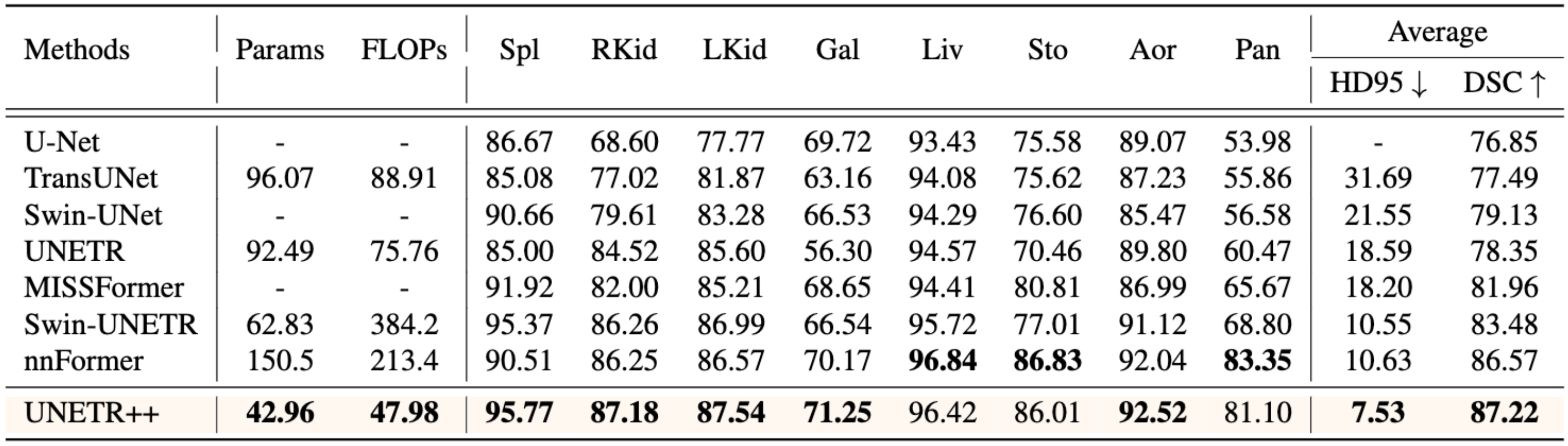

Comparison with SOTA (Synapse)

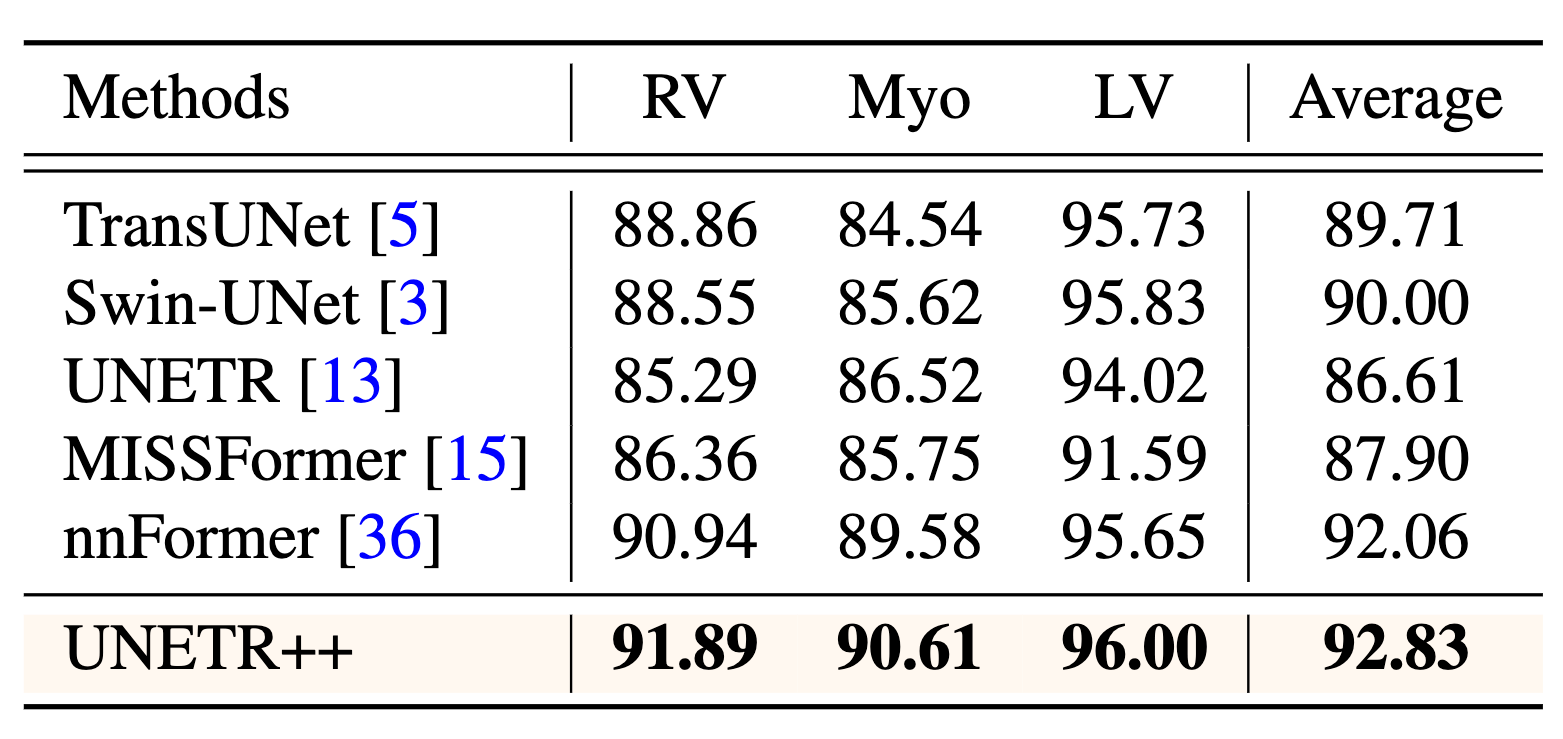
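For readers less familiar with the Dice score used in these comparisons, the sketch below computes it per label on integer segmentation maps using the standard definition 2|P ∩ T| / (|P| + |T|). The function name `dice_score`, the toy arrays, and the convention of returning 1.0 when a label is absent from both maps are assumptions made for this example; the repository's evaluation scripts may differ.

```python
import numpy as np

def dice_score(pred, target, label):
    """Dice overlap for one label; works for 2D or 3D integer label maps."""
    p = np.asarray(pred) == label
    t = np.asarray(target) == label
    denom = p.sum() + t.sum()
    if denom == 0:
        # Assumed convention: label absent in both maps counts as a perfect match.
        return 1.0
    return 2.0 * np.logical_and(p, t).sum() / denom

# Toy prediction and ground truth with labels 0 (background), 1, and 2.
pred = np.array([[0, 1, 1],
                 [2, 2, 0]])
target = np.array([[0, 1, 0],
                   [2, 2, 2]])

per_label = [dice_score(pred, target, label) for label in (1, 2)]
mean_dice = float(np.mean(per_label))
print(per_label, mean_dice)
```

A benchmark-level number is typically obtained by averaging such per-label scores over the organs and test volumes.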

Comparison with SOTA (ACDC)

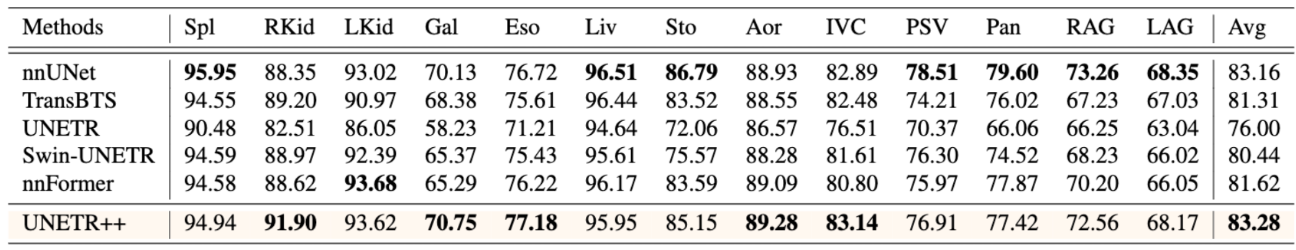

Comparison with SOTA (BTCV)

Comparison with SOTA (BRaTs)

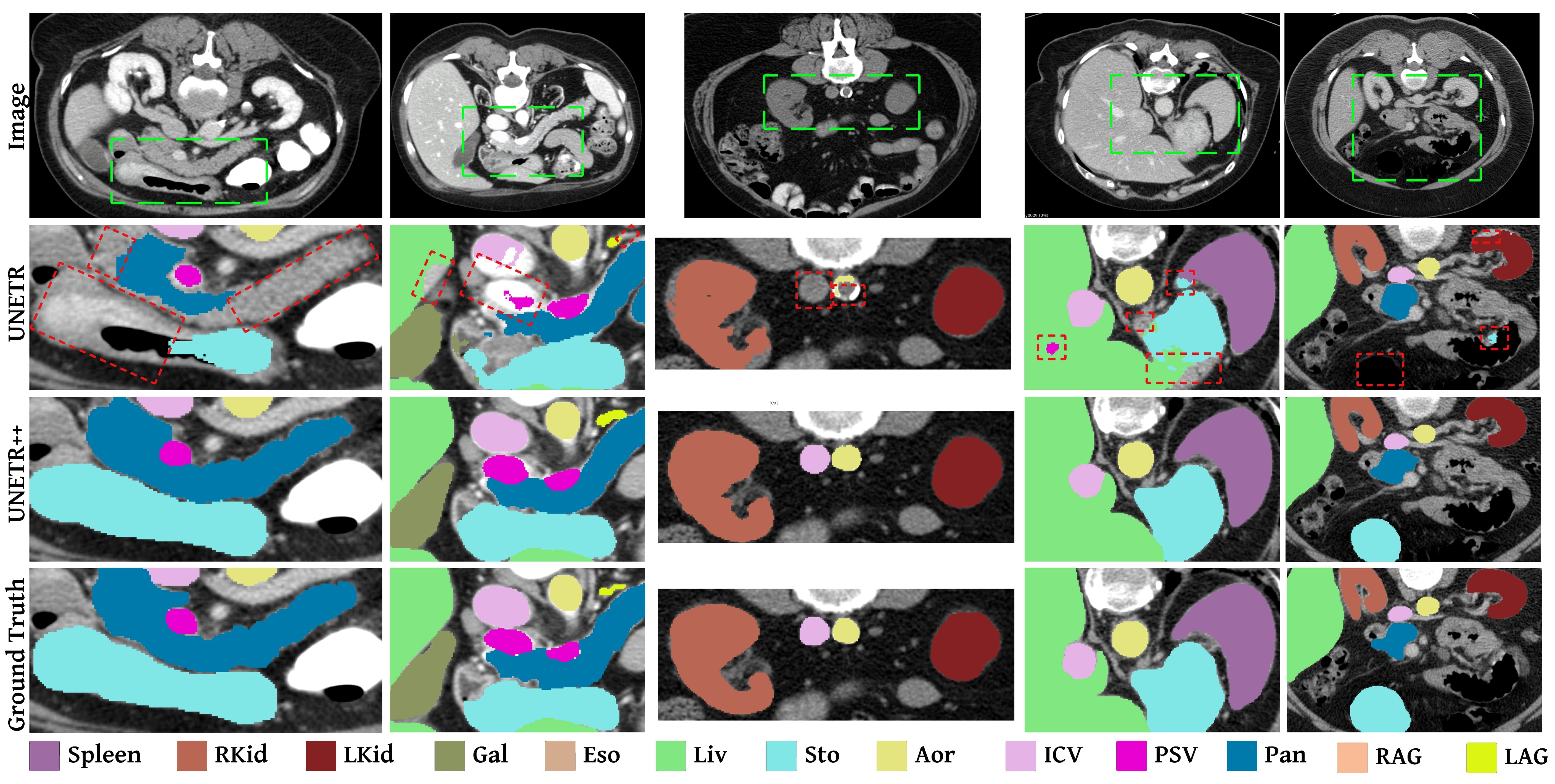

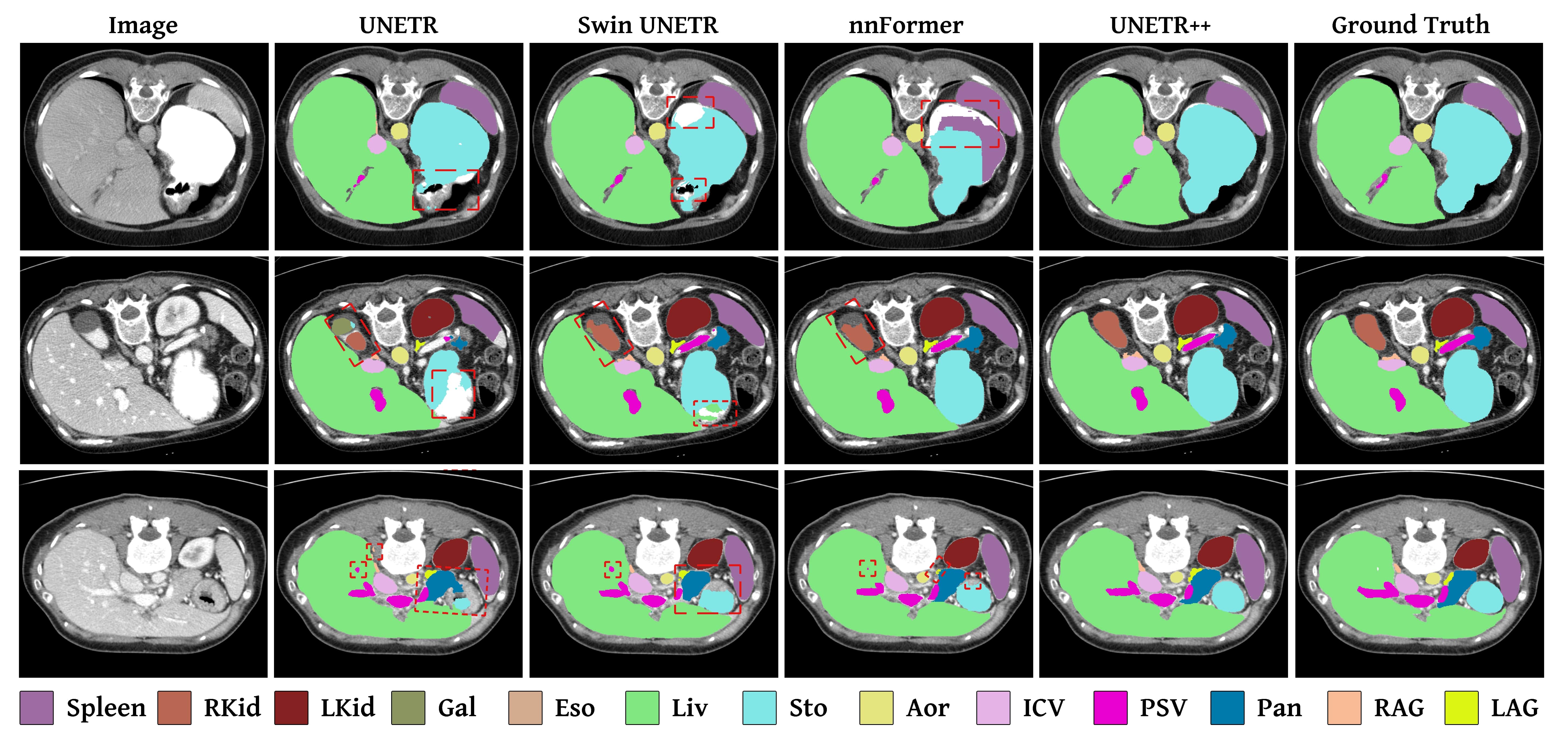

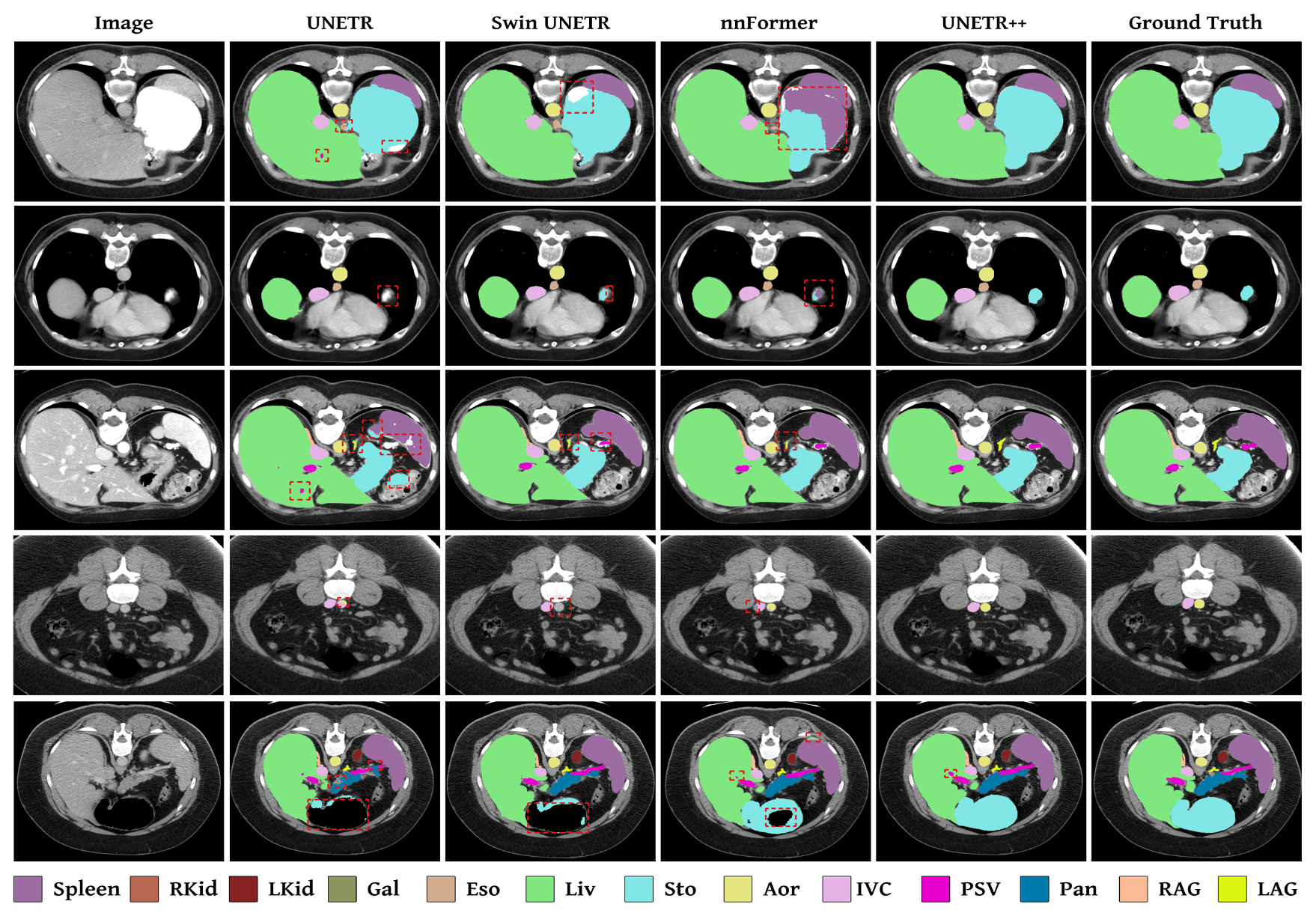

Qualitative Baseline Comparison (Synapse)

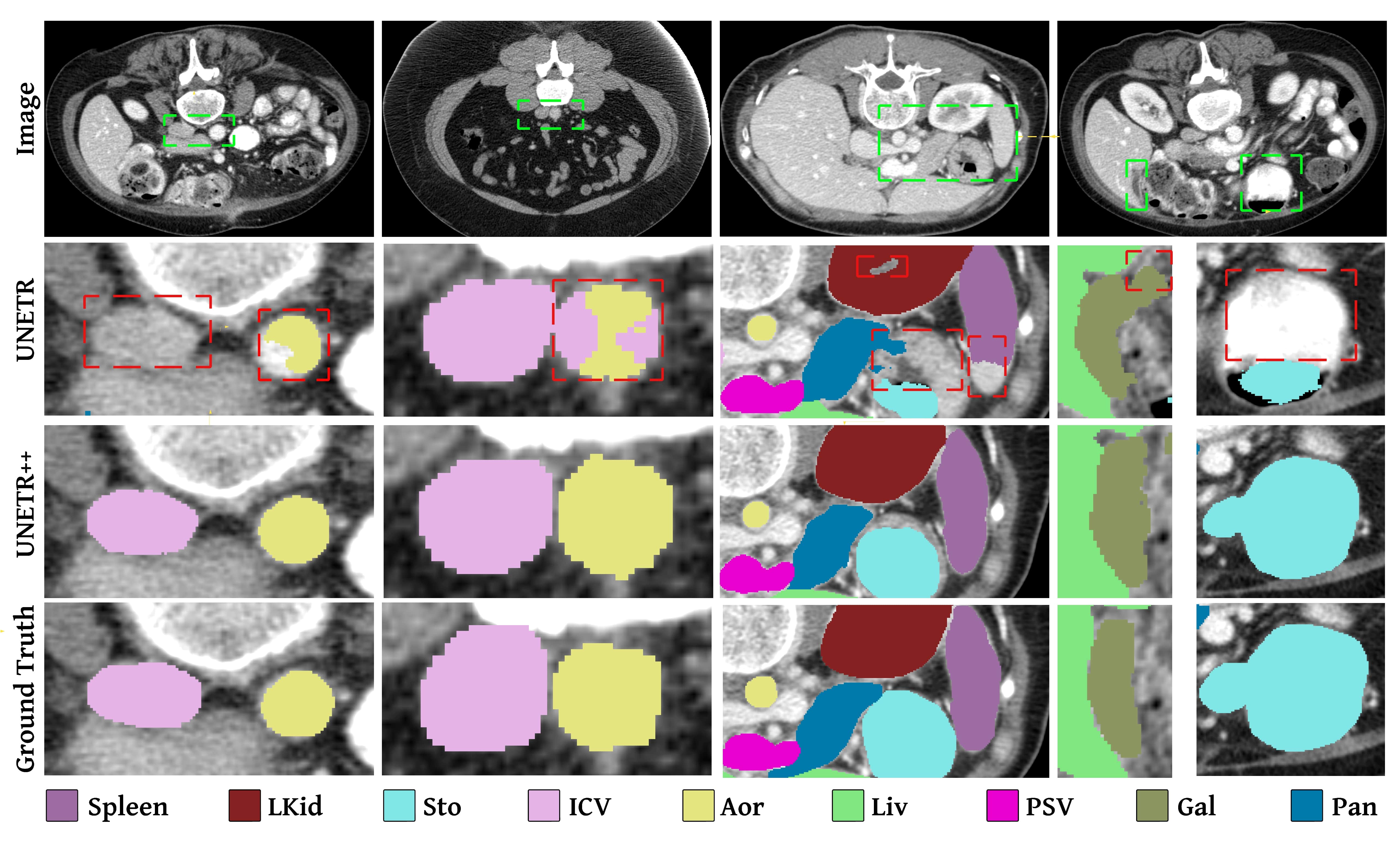

Qualitative Results (Synapse)

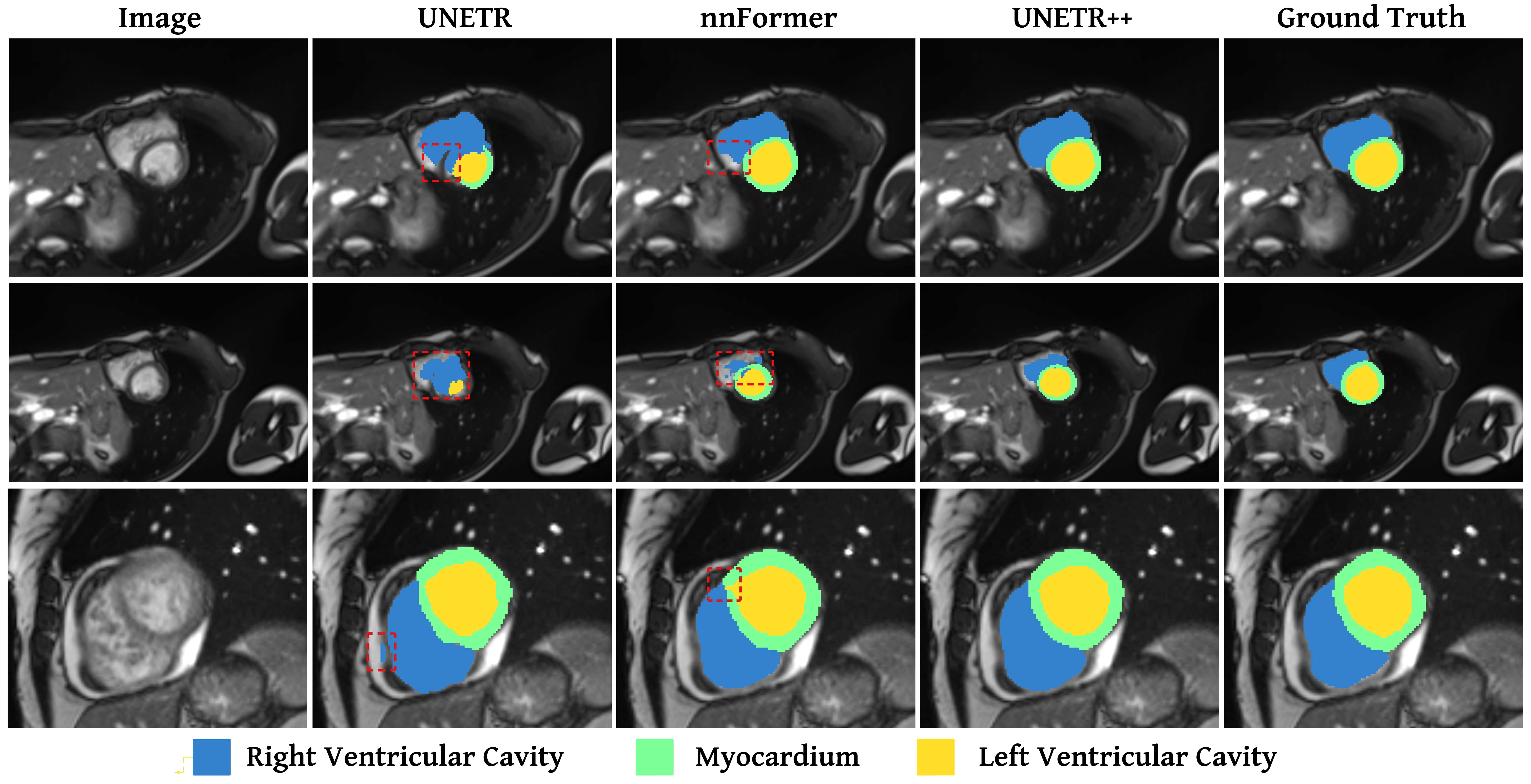

Qualitative Results (ACDC)

Citation

If you use our work, please consider citing:

    @ARTICLE{10526382,
      title={UNETR++: Delving into Efficient and Accurate 3D Medical Image Segmentation},
      author={Shaker, Abdelrahman M. and Maaz, Muhammad and Rasheed, Hanoona and Khan, Salman and Yang, Ming-Hsuan and Khan, Fahad Shahbaz},
      journal={IEEE Transactions on Medical Imaging},
      year={2024},
      doi={10.1109/TMI.2024.3398728}
    }

Contact

If you have any questions, please create an issue on this repository or contact me at abdelrahman.youssief@mbzuai.ac.ae.